May 2, 2025 | Global Equity

The AI Opportunity: Investing Billions, Impacting Trillions

Investing has long balanced art and science, blending ambition with caution. That duality is particularly acute when investing in the artificial intelligence (AI) ecosystem, where billions of dollars drive rapid developments that merit both optimism and rigorous scrutiny.

In part one of this new series, AI Alpha unveils AI’s promise; in part two, it tempers that optimism with critical inquiry. The key questions are whether AI will deliver on its financial promises, and who will ultimately capture these returns—the corporations or the consumers? Outcomes will vary by company, but we contend that today’s multibillion-dollar investments will translate into trillions of dollars of value over time.

The rise of AI marks the dawn of a new era. To navigate tomorrow’s technological landscape, investors must understand its underlying nature rather than rely on superficial impressions, and remember the truism that hardware and software are complementary.

Consider how the early pioneers of the semiconductor transistor and the internet, technologies once dismissed as niche tools for academia or military research, perceived a deeper truth: when compute grew ever cheaper, information could be processed with unprecedented speed and efficiency, and when communication costs plummeted, the dormant channels of global discourse burst into life. These were not random events but inevitable outcomes of fundamental shifts in physics and economics. By embracing a first-principles approach, one can discern the trajectory of innovation and thus identify long-term growth opportunities rather than ephemeral trends.

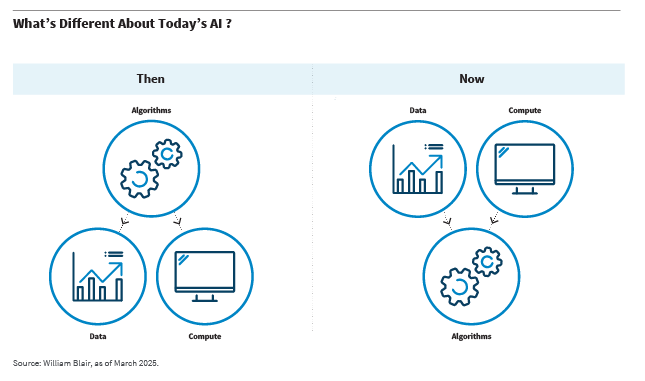

What is it about AI’s core design that naturally produces transformative outcomes? Though a future blog will explore this in detail, consider that since the birth of the modern computer industry on the von Neumann architecture in the 1940s, software engineers have crafted systems in which algorithms, like master craftsmen, orchestrated data and compute into a precise, predetermined mechanism.

However, in the current paradigm, transformer-based models on graphics processing units (GPUs) have turned that mechanism inside out. If the algorithms serve as the gears of a clock, then the growth in data, driven by the internet, and compute, driven by semiconductor technology, are no longer inert components but the very hands that set the gears in motion.

Reversing the roles of data, compute, and algorithms is more than a semantic shift of algorithms from “software” to “AI”; it is a fulcrum upon which the landscape of technology pivots, a monumental leap that has the potential to redefine the very contours of progress. Traditional software is deterministic, executing precise instructions. In contrast, AI digests orders of magnitude more data at scale, continuously adjusting itself to deliver the most likely outcomes despite the complexities and nuances of the data it encounters. This iterative refinement makes it probabilistic. AI is then a model, a faithful replica, of our inherently intricate real world, capturing and mirroring patterns from vast expanses of data, with a capacity for complexity and intellect that often surpasses the confines of the human mind.
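A toy sketch can make the contrast between deterministic and probabilistic software concrete. Everything in it is an assumption introduced for illustration: the spam-filter framing, the function names `rule_based_filter`, `fit_word_counts`, and `most_likely_label`, and the four-message dataset. It is a simple word-frequency model, not a transformer, and is meant only to show a system whose behavior comes from data rather than from hand-written rules.

```python
from collections import Counter

def rule_based_filter(message: str) -> str:
    """Deterministic: a hand-written rule that always gives the same answer."""
    return "spam" if "free money" in message.lower() else "ham"

def fit_word_counts(examples):
    """Count how often each word appears under each label (the 'training' step)."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def most_likely_label(counts, message: str):
    """Probabilistic: score each label with add-one-smoothed word frequencies
    (equal label priors assumed) and return the most likely label together
    with its estimated probability."""
    vocab = set(counts["spam"]) | set(counts["ham"])
    scores = {}
    for label, words in counts.items():
        total = sum(words.values()) + len(vocab)
        score = 1.0
        for word in message.lower().split():
            score *= (words[word] + 1) / total
        scores[label] = score
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())

# Hypothetical training data, invented for this illustration.
EXAMPLES = [
    ("win cash prize now", "spam"),
    ("claim your cash reward", "spam"),
    ("lunch at noon tomorrow", "ham"),
    ("quarterly report attached", "ham"),
]

if __name__ == "__main__":
    model = fit_word_counts(EXAMPLES)
    message = "claim cash now"
    print("rule-based:", rule_based_filter(message))
    print("data-driven:", most_likely_label(model, message))
```

The hand-written rule misses this message because it only knows the phrase it was given, while the data-driven version labels it as spam with a probability attached. Adding more examples changes the second model's behavior without anyone rewriting its logic.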

The shift from deterministic algorithms to their probabilistic counterparts mirrors the intellectual leap from Newtonian physics to the 20th-century probabilistic frameworks of quantum mechanics and complexity theory, where uncertainty and emergent phenomena play a central role. This evolution reflects a broader intellectual journey from a strictly cause-and-effect worldview to one that embraces the inherent ambiguities and unpredictabilities of the natural world, a transformation that Ray Solomonoff, as early as the 1950s, recognized as the true destiny of algorithms. Now, with the explosion of data and leaps in computational power, the world has finally caught up to his prescient vision.

Today’s AI, rooted in its essential probabilistic nature yet reaching far beyond, stands as a discerning decision-maker—modeling the real world through high-quality input-output processing and digesting data on an unmatched scale. At the same time, it serves as a powerful tool that fuels experimentation, transforming trial and error into a catalyst for revolutionary research and development (R&D), accelerating cycles of trial, error, refinement, and ultimately discovery. With its expanding capabilities and rapidly falling costs, AI has the potential to both enhance and replace human judgment, transform R&D, and redefine software. With vast sums already funneled to labor, R&D, and software, I believe AI's impact can potentially reach into the trillions of dollars.

Let’s explore how we get to that number by looking at each category in more detail.

Until now, the human mind orchestrated the complex tasks that propelled economic activity. Today, however, AI emerges as a new force, also adept at modeling the world and exhibiting intelligence, reshaping the winner-take-all dynamics of economic labor.

AI excels at routine tasks by relying on inductive reasoning to extract probabilistic patterns from vast datasets, while the human mind retains an edge in deductive reasoning, dissecting novel problems through logical analysis. The former thrives on correlation, the latter on causation. An equity analyst who depends solely on pattern recognition—ingesting news, podcasts, and research reports—risks being outperformed by the more powerful AI, whereas one who applies mental models to understand causation can navigate novel scenarios more effectively where the AI finds the complexity difficult to interpret. This clear division of labor highlights that while AI is adept at replicating routine tasks, human reasoning remains essential for addressing unique challenges.

Many roles once dominated by human effort are increasingly susceptible to disruption as AI assumes tasks that once required extensive manual processing, particularly since many people are employed to manage the repetition of the daily economic machinery, providing a vast arena for the application of inductive AI. Even the supposedly difficult-to-reach areas are being challenged. In industries as heavily regulated as healthcare and insurance, AI has already demonstrated its capacity to cut processes that took 15 weeks and 50 experts to less than 10 minutes and 3 experts.[1] Meanwhile, a quarter of all computer programming jobs have already vanished.[2]

AI’s role is twofold, as it may either complement human labor by enhancing efficiency or replace it entirely when tasks are routine. The relentless logic of capitalism suggests that companies leveraging AI’s advantages—its efficiency, cost-effectiveness, and capacity for continuous improvement—are likely to outcompete those clinging to outdated methods, driving a transformation of labor demand. In the true spirit of Joseph Schumpeter’s creative destruction, firms must disrupt their own labor practices from within, for if they fail to do so the market will impose change upon them, replacing inertia with innovation.

Getting to the Trillions

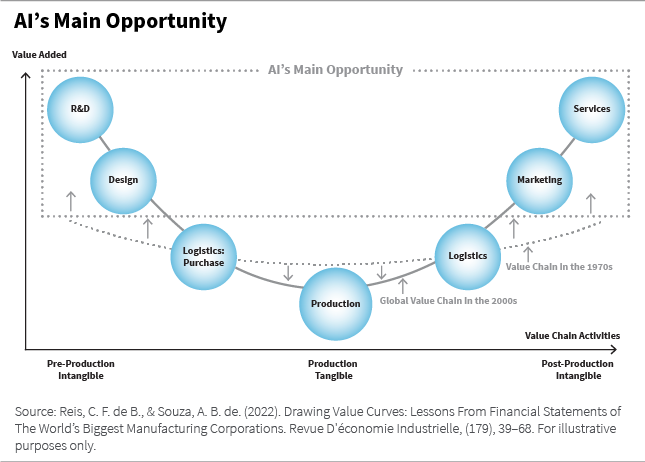

Through globalization, developed countries have retained high-value-added, intangible services domestically while outsourcing lower-value tasks such as manufacturing, thereby creating a vulnerability.

Global labor spending likely exceeds $50 trillion annually.[3] In the United States alone, $10 trillion is devoted to wages, of which $7 to $8 trillion funds service- and knowledge-based activities—research, design, marketing, finance, and education—which are areas naturally suited to AI’s data-processing and pattern-recognition capabilities. This enables AI to assume and augment tasks once the exclusive province of human effort.

As an example, in sectors such as legal, financial services, and healthcare, the reduced cost and increased digital accessibility of AI make it significantly easier for everyone to obtain legal, financial, and health information. Consequently, providers in these fields must adapt by specializing and offering premium services or face displacement by AI. This creates a dual transformation: while the labor force is reshaped, customers benefit from lower costs and democratized access to information.

Even in traditional areas such as manufacturing, which accounts for only 10% to 11% of U.S. gross domestic product (GDP),[4] a significant portion of activity is high-value innovation. The nation allocates roughly 3.5% of GDP to R&D,[5] and manufacturing firms conduct about 70% of all private R&D. This implies that a significant portion of manufacturing’s output derives not only from assembly-line labor, but also from research, engineering, and design, which are higher-value tasks that will be affected by AI.

AI functions as a digital model of the real world that replicates physical assets and processes, serving as a virtual laboratory where real-world complexities are mirrored and experimentation and rapid prototyping are both possible and efficient. This setup enables controlled trial and error, with its probabilistic compute drawing on diverse data sources—text, images, video, chemical molecules, genes, code, sensor data, databases, and much more—to simulate and refine outcomes, thereby accelerating innovation across various industries.

This capacity for experimentation is already being applied across multiple fields. For example, AI is used to design innovative battery components, create new polymers, and deal with novel autonomous driving scenarios. AI can also help tackle monumental challenges such as global warming. AI models excel at sifting through billions of possibilities among metal-organic frameworks (complex structures of metal ions linked by carbon compounds, tailored to specific climates such as humid sea-level versus dry, high-altitude conditions) to identify the optimal CO2-absorbing material, a task that would overwhelm human chemists.[6]

Although the inherent flexibility of AI’s probabilistic outputs may slow adoption in areas requiring exact precision, such as healthcare or finance, the world is inherently imprecise. We do not seek the perfect version of a movie, podcast, blog, or article, but rather we desire one that is “good enough” to fulfill our needs—whether to entertain, to illuminate the workings of a company, to complete a task, or to discover a molecule that mitigates illness. It is in this prevailing imprecision—where exactitude is the exception—that AI finds a vast canvas for experimentation, steering us toward ever more refined and effective solutions.

Getting to the Trillions

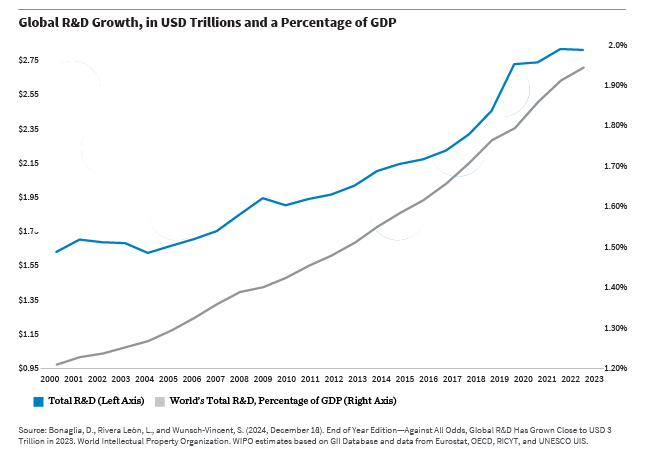

Any repetitive process that yields data suitable for pattern extraction naturally lends itself to systematic AI-driven experimentation. Today, industries such as semiconductor chip design, drug discovery, software development, and content creation are rapidly incorporating AI to enhance efficiency and innovation. Companies in these sectors are investing heavily in research and development, with global R&D spending reaching approximately $2.75 trillion annually[7]—a figure that continues to grow as a percentage of global GDP.[8]

As AI reduces the cost and increases the speed of experimentation, we can expect overall R&D expenditure to expand further, while the share driven by AI also rises significantly. In time, AI-enhanced research may come to dominate the R&D landscape, capturing an increasingly large portion of this multitrillion-dollar investment.

The same economic forces that complement and replace labor are equally transforming software. The Moneyball revolution once upended baseball by redefining player valuation and team strategy through software; today, AI is not merely following in those footsteps but is propelling the sport into a new era.[9] AI’s impact extends far beyond baseball, of course. For instance, it directs Meta in tailoring content and advertisements for more than three billion users, underpinning $165 billion in revenue. In a dramatic shift, Tesla’s autonomous driving mission moved from years of investing in full self-driving (FSD) v1 through v11, systems based on hard-coded rules for performance and safety, to FSD v12, which employs iterative, efficient models that reduce C++ code and human intervention by a hundredfold while significantly enhancing performance and safety.

Yet, not every organization boasts visionary leadership. While tech giants have swiftly pivoted toward these innovations, many firms remain anchored in outdated practices. In the digital age, every organization will be affected by AI to varying degrees—some adapting rapidly, others being compelled to change by the relentless logic of capitalism, where creative destruction spares no one.

Getting to the Trillions

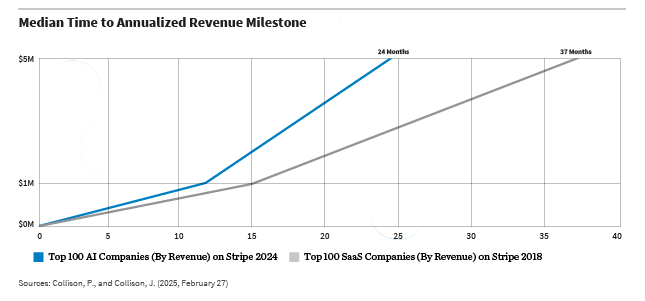

The transition from traditional software to AI is well underway, with AI already outpacing conventional software-as-a-service (SaaS) at this early stage of its journey.

As part of the financial ecosystem driving this change, Stripe’s 2024 annual report provides valuable insight, referring to “a large number of companies with rapidly growing businesses including OpenAI, Anthropic, Suno, Perplexity, Midjourney, Cognition, ElevenLabs, LangChain, Pinecone, Mistral, Cohere, Sierra, Decagon, Invideo, and countless others that aren’t yet household names (but may become so at any moment).”[10]

These emerging companies represent just a glimpse of the future being built on the foundation of AI. Consider that “Cursor, the AI-powered coding assistant, raced to over $100 million in recurring revenue in just three years.”[11] To describe it simply as a “coding assistant” is to diminish its economic resonance. It has ushered in what some now call “vibe coding,” enabling the creation of games in mere hours, a task that once demanded years of effort and gave rise to what now looks like a bloated game development industry. In this singular example, we witness how AI emerges not simply as a tool but as a force rewriting our economic and software paradigms.

Worldwide, traditional software spending now exceeds $700 billion[12] and is forecast to reach $1 trillion[13] before the decade’s end. For decades, the Information Age has been built on the sturdy foundation of algorithms embedded in traditional software, woven ever more deeply into society’s fabric—now, AI is set to accelerate the role of algorithms in driving economic activity.
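As a rough check on what that forecast implies, the sketch below computes the compound annual growth rate needed to move from $700 billion to $1 trillion. The five-year horizon (2025 to 2030) is an assumption, since the text says only “now” and “before the decade’s end,” and the function name `implied_annual_growth` is hypothetical.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value in `years` years."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

if __name__ == "__main__":
    # Figures from the text, in billions of dollars; the 5-year horizon is an assumption.
    rate = implied_annual_growth(start_value=700, end_value=1_000, years=5)
    print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions the implied growth rate is a little over 7% per year. A shorter horizon or a higher starting base would change the figure, so it should be read as an order-of-magnitude check rather than a forecast.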

Even at this embryonic stage, AI has shown that when it models the complexity of the real world through data, it can be optimized to generate billions of dollars in savings. Shell’s PortXChange cut vessel idle time by 20%, Fortescue’s AI-driven energy use and mining operations trimmed power capacity costs by nearly $500 million, and UPS’s AI-recalibrated delivery routes slashed fuel expenses by hundreds of millions of dollars.[14]

Satya Nadella, CEO of Microsoft, has warned that business applications, essentially databases with embedded business logic, could be on the brink of collapse, to be replaced by AI agents capable of learning and executing complex logic, which could upend conventional systems.[15] Chamath Palihapitiya’s critique of the $5 trillion Software Industrial Complex (SIC), which he contends inflates at 10% to 15% per year and has grown unsustainable over 30-plus years, is underscored by CIOs spending hundreds of millions on bloated solutions that drain margins.[16] At this critical juncture, any SaaS model that merely replicates low-context human tasks without incorporating deep, nuanced domain expertise or a human element is at risk of being replaced by AI.

In a global economy valued at $100 trillion, I believe AI is poised to command an ever-growing share of business and consumer workloads, propelling its economic influence into the realm of trillions.

Much remains to unfold. I believe that AI, as a general-purpose technology, will not only augment other technological breakthroughs, but also amplify their impact, driving economic value into the realm of trillions of dollars. Yet this enormous potential does not automatically translate into outsized investment returns, a subject that part two of this series will examine in detail.

If you’d like more information about how we’re thinking about AI in our portfolios, please reach out to our sales contacts.

Gurvir Grewal is a global research analyst on William Blair’s global equity team.

[1] Source: The Information, [2] Source: 13D Research & Strategy, WILTW, as of April 10, 2025, [3] Source: Our World in Data, [4] Source: U.S. Department of Defense, [5] Source: U.S. Department of Defense, [6] Source: The Economist, [7] Source: World Intellectual Property Organization (WIPO), [8] Source: World Bank, [9] Source: The Economist, [10] Source: Stripe, [11] Source: Stripe, [12] Source: Statista, [13] Source: Precedence Research, [14] Source: The Economist, [15] Source: Bg2 Pod, [16] Source: All-In Podcast.
